Visual Design Intuition

predicting dynamic properties of beams from raw cross-section images

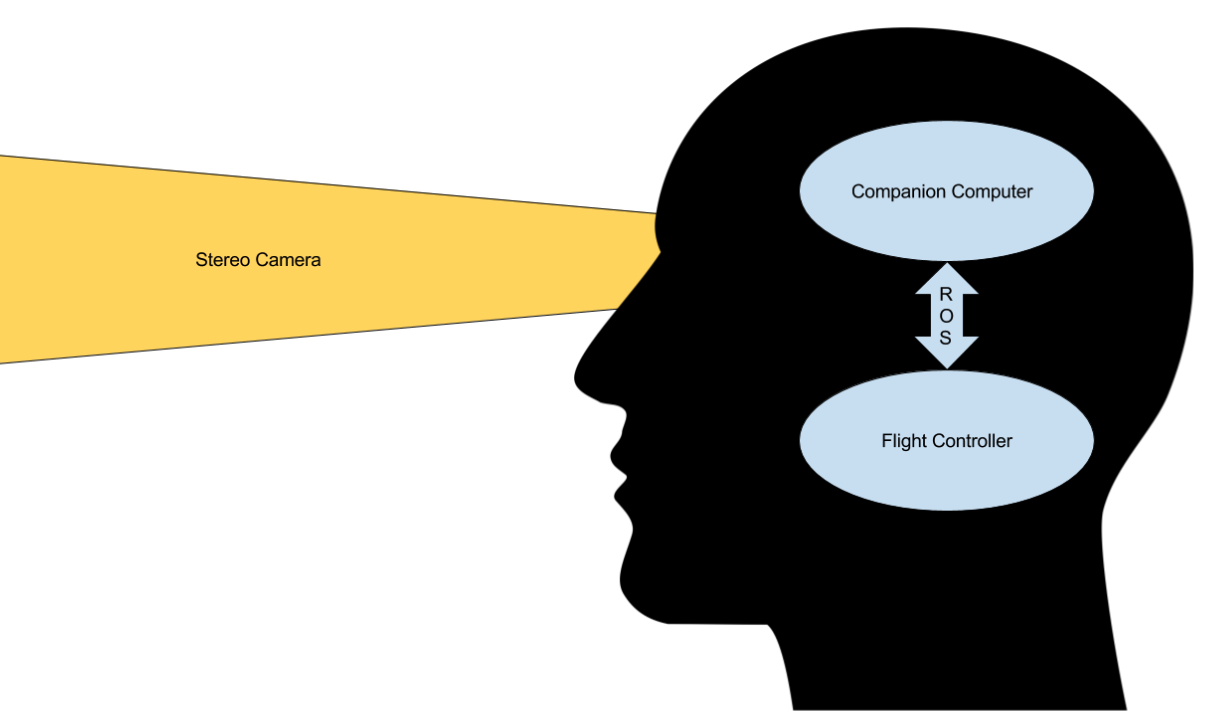

Can machines mimic visual intuition?

An artistic representation, generated using DALL·E 2, of a humanoid robot contemplating the design of a bridge

In this work, we aim to mimic the human ability to acquire an intuition for estimating the performance of a design from visual inspection and experience alone. We study the ability of convolutional neural networks to predict static and dynamic properties of cantilever beams directly from their raw cross-section images. Using pixels as the only input, the resulting models learn to predict beam properties such as volume, maximum deflection and eigenfrequencies with 4.54% and 1.43% mean absolute percentage error (MAPE), respectively, compared with the finite-element analysis (FEA) approach. Training these models requires no prior knowledge of theory or of relevant geometric properties; it relies solely on simulated or empirical data, so the models make predictions based on ‘experience’ rather than theoretical knowledge. Since this approach is over 1000 times faster than FEA, it can be used to create surrogate models that speed up preliminary optimization studies in which numerous consecutive evaluations of similar geometries are required. We suggest that this modeling approach could aid in addressing challenging optimization problems involving complex structures and physical phenomena for which theoretical models are unavailable.
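To make concrete what "theory and relevant geometric properties" means here, the sketch below shows the classical route that the networks bypass. It is a minimal illustration, not the authors' method: it assumes a slender, homogeneous cantilever described by Euler-Bernoulli beam theory (the study itself uses FEA as the reference) and a binary cross-section image in which 1 marks material. It derives area and second moment of area from the pixels, converts them into volume, tip deflection under a point load and first natural frequency, and scores predictions with MAPE. The function names (`section_properties`, `cantilever_properties`, `mape`), the pixel size and the material values are all hypothetical.

```python
import numpy as np

# Hypothetical helpers illustrating the classical "theory" route from a
# cross-section image to beam properties (Euler-Bernoulli assumptions, NOT FEA).

BETA1_L = 1.875104068711961  # first root of 1 + cos(x) * cosh(x) = 0 (cantilever mode 1)


def section_properties(img, pixel_size):
    """Return (area, I) of a binary cross-section image.

    img: 2-D array, 1 = material, 0 = void; rows run along the vertical axis.
    pixel_size: edge length of one square pixel in metres.
    I is the second moment of area about the horizontal centroidal axis.
    """
    img = np.asarray(img, dtype=float)
    if img.sum() == 0:
        raise ValueError("image contains no material pixels")
    dA = pixel_size ** 2
    row_area = img.sum(axis=1) * dA                    # material area in each pixel row
    y = (np.arange(img.shape[0]) + 0.5) * pixel_size   # height of each row centre
    area = row_area.sum()
    y_c = (row_area * y).sum() / area                  # centroid height
    # Parallel-axis theorem: row offsets from the centroid plus each pixel's own s^4 / 12
    I = (row_area * (y - y_c) ** 2).sum() + img.sum() * pixel_size ** 4 / 12
    return area, I


def cantilever_properties(img, pixel_size, length, E, rho, tip_load):
    """Volume, tip deflection under a tip point load, and first natural frequency (Hz)."""
    area, I = section_properties(img, pixel_size)
    volume = area * length
    max_deflection = tip_load * length ** 3 / (3 * E * I)
    f1 = BETA1_L ** 2 / (2 * np.pi) * np.sqrt(E * I / (rho * area * length ** 4))
    return {"volume": volume, "max_deflection": max_deflection, "f1_hz": f1}


def mape(y_true, y_pred):
    """Mean absolute percentage error, in percent."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    return 100.0 * np.mean(np.abs((y_true - y_pred) / y_true))


if __name__ == "__main__":
    solid = np.ones((20, 10))  # 20 mm tall, 10 mm wide at 1 mm per pixel
    props = cantilever_properties(solid, 1e-3, length=1.0, E=200e9, rho=7850, tip_load=100.0)
    print(round(props["max_deflection"], 6))  # 0.025 (metres)
```

Euler-Bernoulli theory ignores shear deformation and rotary inertia, so these closed-form values are only a rough stand-in for FEA labels, especially for short or stocky beams. A network trained on FEA data instead learns the mapping from pixels to properties directly, without ever computing quantities such as `area` or `I`.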

Wyder PM, Lipson H. Visual design intuition: predicting dynamic properties of beams from raw cross-section images. Journal of The Royal Society Interface. 2021;18(184):20210571. doi:10.1098/rsif.2021.0571